Ensuring the safety and security of your data in Azure DevOps is crucial to the smooth operation of any organization. Microsoft, the provider of Azure DevOps, emphasizes the importance of backing up your data to prevent data loss or disruption. Here are some key reasons why organizations should prioritize backing up their Azure DevOps data.

1. Microsoft’s Service Agreement

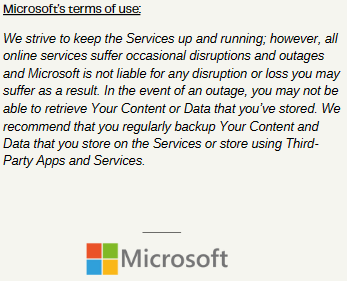

In the Customer Service Agreement, Microsoft explicitly advises customers to regularly back up their content and data stored on the Services using third-party applications and services. This proactive approach can help mitigate risks associated with data loss.

Microsoft Terms of Use: “We recommend that you regularly backup Your Content and Data that you store on the Services using Third-Party Apps and Services.”

2. Shared Responsibility Model

Microsoft operates on a Shared Responsibility Model, where both the provider and the customer have specific responsibilities. While Microsoft ensures the security of the service and infrastructure, customers are accountable for safeguarding their data and accounts. Understanding and fulfilling these obligations is essential for maintaining a secure environment.

Azure DevOps is hosted entirely on Microsoft Azure and is subject to the Microsoft Azure Shared Responsibility Model.

Microsoft itself states: “for all cloud deployment types, you own your data and identities. You are responsible for protecting the security of your data and identities”.

“Whatever your approach, you should consider all data potentially ‘at risk’, no matter where it is or how it is being used. This is true for both data in the cloud as well as data stored in a private data center.”

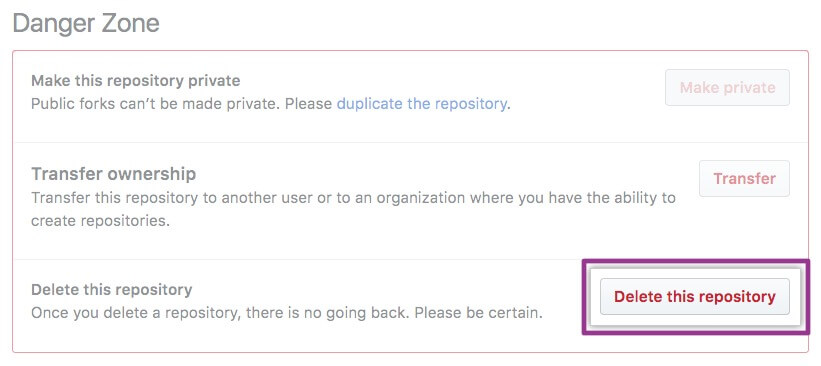

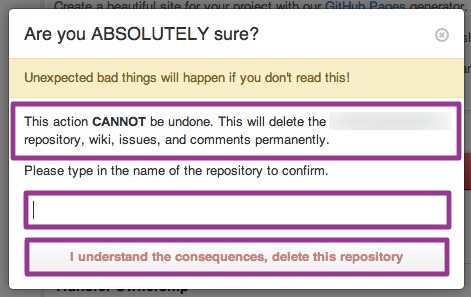

3. Data Deletion

Deleted data in Azure DevOps may not be retrievable indefinitely. Microsoft cautions users that data may be permanently removed after a certain period. To avoid accidental data loss, back up your data regularly.

Microsoft openly warns, “Remember, we might get rid of data for good after it’s been deleted for a certain time. Always back up your data to avoid losing it by accident.”

4. Microsoft 28-day Recovery Timeframe

Microsoft enforces a 28-day window for recovering deleted projects. Once this period has passed, the deleted data may be irreversibly lost, so recovery has to happen promptly. Regular backups serve as a safety net in such scenarios.
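To make the 28-day window concrete, here is a minimal Python sketch that computes the last day a deleted item could still be restored and how many days remain. It assumes the window is counted in calendar days from the deletion date; `RECOVERY_WINDOW_DAYS`, `last_restore_day` and `days_remaining` are hypothetical names for illustration, not part of any Azure DevOps API. Check Microsoft's current documentation for the exact rules.

```python
from datetime import date, timedelta

# Assumption: the recovery window is 28 calendar days counted from the deletion date.
RECOVERY_WINDOW_DAYS = 28


def last_restore_day(deleted_on: date) -> date:
    """Return the last day on which a deleted item is assumed to be restorable."""
    return deleted_on + timedelta(days=RECOVERY_WINDOW_DAYS)


def days_remaining(deleted_on: date, today: date) -> int:
    """Return the days left in the assumed recovery window (0 once it has passed)."""
    return max(0, (last_restore_day(deleted_on) - today).days)


if __name__ == "__main__":
    deleted = date(2024, 3, 1)
    print(last_restore_day(deleted))  # 2024-03-29
    print(days_remaining(deleted, date(2024, 3, 20)))  # 9
```

If a deletion is only noticed weeks later, for example during an audit, `days_remaining` may already be 0, which is exactly the case an independent backup covers.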

5. Organization-wide Restoration

In the event of data loss, Microsoft offers restoration at the organization level rather than recovery of individual files. This limitation should inform the backup strategy an organization adopts.

6. Support Response Time

Given Microsoft’s vast customer base, support response times can vary from days to weeks. Delays in resolving data loss incidents affect business continuity. Proactive backups reduce dependence on external support for data recovery.

7. Outage Concerns

During service outages, retrieving stored content or data from Azure DevOps may not be feasible. This highlights the significance of having independent backups to maintain access to critical information.

Microsoft's documentation advises on outages: “In the event of an outage, you may not be able to retrieve Your Content or Data that you’ve stored.”

Conclusion

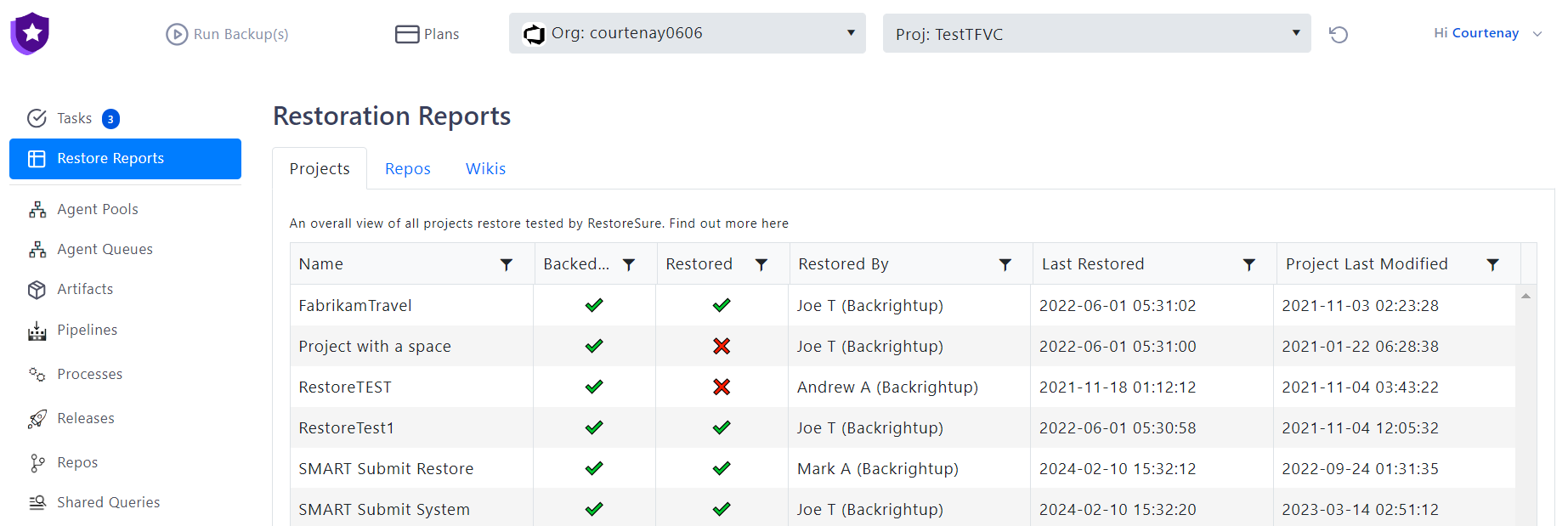

Microsoft encourages customers to explore reliable backup solutions for Azure DevOps. Backrightup offers global support for protecting Azure DevOps data and addresses issues that customers do not publicly disclose but that are common in day-to-day operations. We hear of such incidents daily: in one case, an employee at a financial insurer deleted project data, and the organization had to wait days before it got the right support. These incidents are common and underscore the importance of organizations being proactive about their own data protection.

By heeding Microsoft’s advice on data backup and leveraging a third-party solution like Backrightup, organizations can mitigate risks to business continuity and safeguard the intellectual property they store in Azure DevOps.

Microsoft: “We recommend that you regularly backup Your Content and Data that you store on the Services using Third-Party Apps and Services.”

If Microsoft advises its customers to back up their data with third-party solutions to minimize risks to business continuity, and there are inexpensive solutions, verified by Microsoft, that protect critical data, what are you waiting for? Contact Backrightup.com for an inexpensive, enterprise-grade solution that ensures quick and seamless data protection, used by the likes of Assurant, Nuvei, Allianz, Isuzu, the Department of Defense and Telstra, to name a few.